As the landscape of cyber threats continues to evolve, organizations are increasingly turning to artificial intelligence (AI) to bolster their cyber defense strategies. Here, we discuss the strategies, and the risks, of adopting AI-driven solutions in cybersecurity.

In an era dominated by digital interactions, cybersecurity has become a critical concern for organizations worldwide, from national security to critical infrastructure to commercial enterprises. Adversary nation-states, transnational criminal enterprises, and others attack targets every minute of every day. At the end of 2022, financial institutions and insurance organizations experienced more than 254 million leaked records. According to the Wall Street Journal, denial of service attacks against banks increased by 154% in 2023.

With the increasing sophistication of cyber threats, both internal and external (see Table 1), and the number of players in the cyber adversarial space, traditional defense mechanisms often fall short of providing adequate long-term protection. In response, many organizations are exploring the potential of artificial intelligence (AI) to enhance their cyber defense capabilities.

| Targets | Attacks |
|---|---|
| Sensors, actuators, robots, and field devices | Application protocol attacks, Response and Measurement Injection Attacks, Time delay attacks |
| Industrial control systems (ICS) | Spoofing attacks, False sequential logic attacks, Deception attacks and False Data Injection attacks, DDoS attacks, Zero-Day attacks, Phishing and Spear Phishing attacks, Ransomware |
| Cyber-physical systems (CPS) | Attacks Against Machine Learning and Data Analytics, Poisoning and Evasion attacks, Advanced Persistent Threats, Man-in-the-Middle attacks, Eavesdropping attacks, and Information Modification |

History is rife with stark examples of data breaches involving several hundred million individual records. But how did these compromises occur? How did we allow these breaches of security to happen? Technology is a competitive differentiator, and it is also what we use to counter the attacks. We need technology to move forward, even ahead of our ability to secure what it is intended to protect. Herein lies part of the problem.

We rely on technology not only to be better than the competition but also to protect assets, a mission that has failed time and again. First, we looked to firewalls to protect data. Then came Cyber 101: passwords and identity management. Given this linear approach to data protection, do we run the same risk of assuming that AI will secure our data? Does this technology enhance our capabilities to erect human and technical protective shields against adversary attacks?

As powerful as AI is and will be in the future, can we rely on it to prevent intrusions and exfiltration of data? There is an old saying about managing data: garbage in, garbage out. We need to be very careful about whose AI we trust. Do we want a company that builds AI for adversarial military purposes (e.g., dual-purpose Chinese providers) to secure our data with its AI? AI reflects the efforts of human beings and technology. Given the potential for virtually unlimited power of AI in the future, we need to be the guardians of how we manage AI development, implementation, and maintenance. We need to be unyielding in defining and protecting the process by which AI is enabled and controlled.

Just because it is AI does not mean that it is worthy of our trust. Trust must be earned. The annals of history are filled with examples of violations of trust—between companies and governments.

Challenges and Risks

While AI offers significant potential in enhancing cybersecurity measures, its implementation also poses several dangers and challenges:

- Models Lack Contextual Understanding: AI algorithms may struggle to interpret complex contextual information, leading to false positives or missed detections.

- Adversarial Attacks: Cyber adversaries can exploit vulnerabilities in your AI systems, for example by manipulating the data a model learns from or receives as input in order to confuse it, undermining the system's effectiveness and integrity.

- Data Privacy Concerns: The use of AI in cyber defense could raise new concerns about data privacy and introduce issues in regulatory compliance that did not exist prior to the introduction of AI solutions, particularly regarding the handling of sensitive information and personal data.

- Bias and Discrimination: AI algorithms trained on biased or incomplete data may exhibit biased results and decisions, leading to discriminatory outcomes and false positives/negatives in cybersecurity tasks.

- Overreliance on AI: Excessive reliance on AI-driven solutions without humans in the loop can lead to complacency and lack of critical thinking, potentially missing indicators of cyber threats. Human-machine collaboration is essential to ensure the effectiveness and accountability of AI-enhanced security solutions.
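The human-in-the-loop point in the last item can be illustrated with a small sketch. It assumes a hypothetical detection model that attaches a confidence score to each alert; the `Alert` class, the `triage` function, the action names, and both thresholds are illustrative assumptions, not part of any specific product. Only very confident detections are acted on automatically, and even then an analyst is notified.

```python
from dataclasses import dataclass


@dataclass
class Alert:
    source: str        # hypothetical sensor or tool that raised the alert
    description: str
    confidence: float  # model's estimated probability that this is malicious


def triage(alert, auto_block_at=0.95, dismiss_below=0.20):
    """Route an alert based on model confidence.

    Thresholds are illustrative assumptions; in practice they would be
    tuned against measured false-positive and false-negative rates.
    """
    if not 0.0 <= alert.confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    if alert.confidence >= auto_block_at:
        # Automated action, but a human is still told about it.
        return "auto_block_and_notify_analyst"
    if alert.confidence < dismiss_below:
        # Kept in the logs so that analysts can audit dismissed alerts.
        return "log_only"
    # Everything in between is ambiguous and goes to a person.
    return "human_review"


# Made-up alerts for illustration.
alerts = [
    Alert("edr", "known ransomware signature", 0.99),
    Alert("ids", "unusual outbound traffic volume", 0.55),
    Alert("mail", "newsletter with tracking link", 0.05),
]
for a in alerts:
    print(f"{a.description}: {triage(a)}")
```

The wide middle band is deliberate in this sketch: it keeps analysts engaged with ambiguous cases rather than letting the model decide everything.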

What is AI cyber defense?

AI encompasses various technologies, including machine learning, natural language processing, and deep learning, which can be harnessed to detect, prevent, and respond to cyber threats. These systems analyze vast amounts of data to find patterns and anomalies, enabling proactive threat mitigation. AI cyber defense combines the analysis of large historical data sets with automated actions on live data, updating and learning continually to counter a cyber threat that is doing the same. It seeks to automate, train, and simulate threat detection, analysis, and capture, and then to apply that learning in a live environment to prevent a system crash or a data leak. AI-driven solutions automate repetitive tasks and improve overall efficiency in cyber defense operations, providing Enhanced Cyber Threat Detection, Real-time Incident Response, Scalability Monitoring, and Predictive Analytics, all of which augment human capabilities.
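As a minimal sketch of the "learn from historical data, then act on live data" idea, the following flags live values that deviate strongly from a historical baseline using a simple z-score. This is a deliberately basic statistical stand-in, not a machine learning model and not a method the article prescribes. The function name `find_anomalies`, the threshold of 3 standard deviations, and the failed-login counts are all illustrative assumptions.

```python
from statistics import mean, stdev


def find_anomalies(baseline, live, threshold=3.0):
    """Return (index, value) pairs from `live` whose z-score against
    `baseline` exceeds `threshold` (an assumed default of 3.0)."""
    mu = mean(baseline)
    sigma = stdev(baseline)
    if sigma == 0:
        # A constant baseline: any deviation at all is unusual.
        return [(i, v) for i, v in enumerate(live) if v != mu]
    return [(i, v) for i, v in enumerate(live)
            if abs(v - mu) / sigma > threshold]


# Hypothetical hourly failed-login counts (made-up numbers).
baseline_counts = [12, 15, 11, 14, 13, 12, 16, 14, 13, 15]
live_counts = [13, 14, 95, 12]

anomalies = find_anomalies(baseline_counts, live_counts)
print(anomalies)
```

A real deployment would refresh the baseline continually and feed flagged values into an incident-response workflow rather than printing them.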

AI Defense Analysis and Implementation:

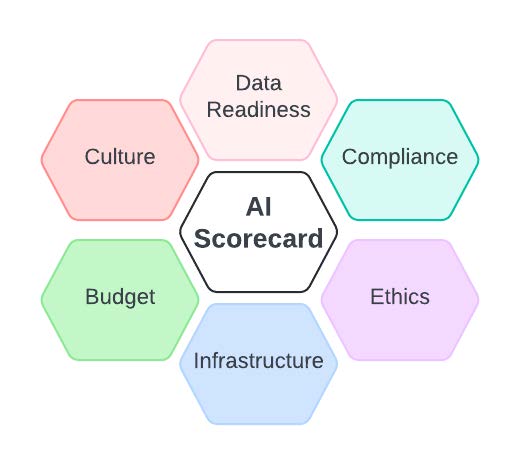

To effectively implement an AI-enhanced cyber defense strategy, enterprises must undertake a series of initiatives and assessments. These measures encompass critical areas such as budget, infrastructure, strategy, data readiness, cultural transformation, compliance, and ethical considerations. You cannot lock systems down so tightly that they become non-functional, but in implementing AI cyber solutions, you need to know your infrastructure, your data, and your implementation challenges and processes to be successful. There is no magic solution without upfront work. Developing an AI Maturity Scorecard is one of the more effective ways to gauge your AI maturity and readiness:

AI Maturity Scorecard

The FOUNDATION – DATA.

Being Ready. Start with the data.

Are you ready? – Data Readiness:

AI systems require vast amounts of data to train and function properly. Data is hard. It translates and integrates poorly. Acquisition, storage, and processing of this data require significant efforts to mitigate inherent privacy and security risks. Unauthorized access, breaches, and dissemination of sensitive information are all concerns for the enterprise contemplating AI.

Data Maturity Assessment

What do you know about your data? How do you store it? Are you using relational databases or data lakes? Other storage solutions? In the cloud or on-prem? Are you digitizing all your data sources? Do you have a robust and scalable infrastructure to accommodate the volume and variety of data you anticipate handling in the future? Are you effectively warehousing your data in alignment with strategic objectives? Are you ensuring representation from diverse user groups – differing types of anticipated data? Are you maintaining data logs?

Cultural and Skills Readiness Assessment

Attention and resources must be allocated to ensure that the right people with the right training or skillsets exist to manage data and an AI solution implementation, empowering them to identify opportunities for successful AI initiatives while fostering a culture that embraces educated risk-taking, experimentation, and innovation.

The success of AI projects depends on the effective collaboration between data scientists, engineers, domain experts and business stakeholders. Consider embedding analysts within operational business units to facilitate training and build feedback loops to reinforce the iterative nature of all AI initiatives.

AI Infrastructure Readiness

Central to AI readiness is the standardization of AI infrastructure and tools across the organization, fostering consistency, scalability, interoperability, reproducibility, security, and interpretability. Scalability deserves particular attention: your infrastructure must be able to handle growing data volumes and inherently large computational requirements as AI initiatives expand.

Budgeting

Budgetary and financial planning should go beyond the acquisition of AI defense products and encompass the upfront costs of data infrastructure, talent acquisition and development, and the other items discussed so far. Moreover, a standardized approach to ROI calculations, incorporating metrics such as lifetime value (LTV), net present value (NPV), and total cost of ownership (TCO), coupled with continuous monitoring procedures, will facilitate a high-quality, forward-looking allocation of resources in alignment with strategic priorities.
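To make two of these metrics concrete, here is a small worked sketch of NPV and TCO. It uses the standard discounted cash flow formula, NPV = sum of CF_t / (1 + r)^t, and treats TCO as a simple undiscounted sum of upfront and running costs. Every figure below (costs, benefits, the 8% discount rate, the three-year horizon) is a made-up assumption for illustration, and the function names are hypothetical.

```python
def npv(rate, cash_flows):
    """Net present value; cash_flows[0] occurs today (year 0)."""
    return sum(cf / (1 + rate) ** t for t, cf in enumerate(cash_flows))


def tco(upfront_cost, annual_costs):
    """Total cost of ownership as a simple undiscounted sum."""
    return upfront_cost + sum(annual_costs)


# Hypothetical figures, for illustration only.
upfront = 500_000                              # product, data infrastructure, hiring
annual_running = [120_000, 120_000, 120_000]   # licences, staff, compute
annual_benefit = [300_000, 350_000, 400_000]   # e.g. estimated avoided incident costs
discount_rate = 0.08

# Net cash flow per year: the investment today, then benefit minus running cost.
flows = [-upfront] + [b - c for b, c in zip(annual_benefit, annual_running)]

print(f"TCO over 3 years: {tco(upfront, annual_running):,}")
print(f"NPV at 8%: {npv(discount_rate, flows):,.0f}")
```

Using the same functions and the same discount rate for every proposed initiative is one way to get the standardized comparison described above; the hard part in practice is estimating the benefit figures credibly.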

Integration and Implementation

The successful implementation of AI defense technologies first requires identifying and evaluating AI readiness gaps. Thoroughly mapping business processes and actively challenging every node and edge in your business flow chart simplifies the search for vulnerabilities and ultimately strengthens defenses against attacks on them. Small-scale pilot projects aimed at high-impact AI initiatives serve as a valuable sandbox for future defense implementations, especially if supported by a robust effectiveness measurement system.
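The node-and-edge mapping described above lends itself to a simple graph representation. The sketch below models a process map as a directed graph and enumerates every route from an external entry point to a critical asset, so that each hop can be challenged in turn. The node names, the map itself, and the `attack_paths` helper are hypothetical examples, not a prescribed methodology.

```python
def attack_paths(graph, start, target, path=None):
    """Yield every simple (cycle-free) path from start to target
    in a directed graph given as {node: [neighbours]}."""
    path = (path or []) + [start]
    if start == target:
        yield path
        return
    for nxt in graph.get(start, []):
        if nxt not in path:  # skip nodes already visited on this path
            yield from attack_paths(graph, nxt, target, path)


# Hypothetical business process map: an edge means "can reach / sends data to".
process_map = {
    "internet": ["web_portal", "email"],
    "web_portal": ["app_server"],
    "email": ["employee_workstation"],
    "employee_workstation": ["app_server", "file_share"],
    "app_server": ["customer_db"],
    "file_share": [],
    "customer_db": [],
}

paths = list(attack_paths(process_map, "internet", "customer_db"))
for p in paths:
    print(" -> ".join(p))
```

Each printed route is a candidate for review: which controls sit on each edge, and what a pilot project would need to monitor there.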

Ethical Considerations

The deployment of AI in cyber defense necessitates careful consideration of ethical implications and privacy concerns. Organizations must develop and implement AI ethics guidelines, responsible AI practices, and accountability standards to ensure that AI systems are designed and deployed responsibly, with proper account taken of potential social, ethical, and legal implications. The likely strengthening of AI laws and regulations in the US and Europe will require additional organizational investment in internal ethical guidelines and their supporting infrastructure.
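The assessment areas covered by the scorecard (data, culture and skills, infrastructure, budget, integration, and ethics) could be rolled up into a single weighted score. The sketch below is one possible way to do that, not a standard: the area keys, the weights, the 1 to 5 scale, and the example scores are all assumptions that an organization would replace with its own.

```python
# Hypothetical weights per assessment area; they sum to 1.0.
WEIGHTS = {
    "data": 0.25,
    "culture_skills": 0.15,
    "infrastructure": 0.20,
    "budget": 0.15,
    "integration": 0.15,
    "ethics": 0.10,
}


def maturity_score(scores, weights=WEIGHTS):
    """Weighted average of self-assessment scores on an assumed 1-5 scale."""
    missing = set(weights) - set(scores)
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")
    for area in weights:
        if not 1 <= scores[area] <= 5:
            raise ValueError(f"score for {area} must be between 1 and 5")
    return sum(weights[area] * scores[area] for area in weights)


def weakest_areas(scores, n=2):
    """Return the n lowest-scoring areas, i.e. where to invest first."""
    return sorted(scores, key=scores.get)[:n]


# Made-up self-assessment for illustration.
example_scores = {
    "data": 2,
    "culture_skills": 3,
    "infrastructure": 4,
    "budget": 3,
    "integration": 2,
    "ethics": 4,
}

overall = maturity_score(example_scores)
print(f"Overall maturity: {overall:.2f} / 5")
print("Priority areas:", weakest_areas(example_scores))
```

Data carries the largest weight here only because the article treats it as the foundation; the single number matters less than the per-area gaps it exposes.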

Conclusion:

AI represents the next great challenge and opportunity. Right now AI has the buzz factor; it is the glittery object in which many place excessive trust. The risk is that industry and government want to move quickly to avoid losing competitive positioning. At the same time, we know that we must be cautious about relying too heavily on technology. We must create a trust framework, drawing on the many that already exist. While AI is a global technology, we need to be clear: it can and will be used defensively and offensively. We must establish robust international regulations and cooperative agreements to manage AI’s development and deployment responsibly, ensuring that its use aligns with global security and ethical standards. It starts at your organization.

- Antonio João Gonçalves de Azambuja et al. Artificial Intelligence-Based Cyber Security in the Context of Industry 4.0—A Survey. Electronics 2023, 12, 1920. https://doi.org/10.3390/electronics12081920